Check out our new paper (here) by Jeff Markowitz, Win Gillis, and others from the Datta and Sabatini labs, who collaborated on this work. It identifies neural codes for 3D behavior in dorsolateral striatum (DLS) that support moment-to-moment action selection.

Previous work from Alex Wiltschko in the lab (here) identified an underlying structure in mouse behavior, in which naturalistic exploration appeared to be divided into sub-second behavioral motifs (which we call syllables) that are expressed probabilistically over time (which we refer to as grammar). To identify behavioral syllables and grammar, Alex developed a behavioral characterization system called Motion Sequencing, or MoSeq for short, that combines 3D machine vision with unsupervised, data-driven machine learning.

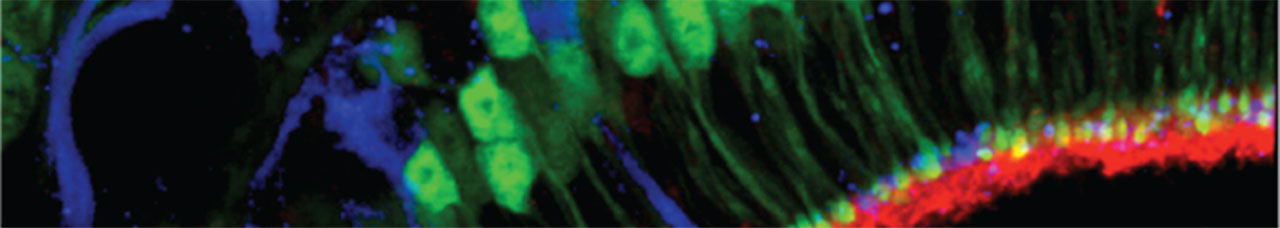

Jeff (who is a joint postdoc between us and Bernardo Sabatini’s lab) and Win had read the fantastic work from the Fentress and Berridge labs demonstrating that the DLS contains neural correlates for the behavioral components and sequences that make up naturalistic, spontaneous grooming behaviors (see a subset of this work here, here and here). Building on these foundational efforts, Jeff and Win hypothesized that the DLS might more generally encode information about spontaneously expressed 3D behaviors, such as behavioral syllables and grammar.

To test this hypothesis, they (with a huge assist from our collaborator Scott Linderman) rendered MoSeq compatible with all forms of neural recording. Using this framework, they found that activity in the striatum systematically fluctuates as mice switch from expressing one syllable to the next; that the direct and indirect pathways encode non-redundant and temporally decorrelated information that represents behavioral syllables as they are expressed; that behavioral grammar is explicitly represented in DLS; and that the DLS is required for the appropriate expression of behavioral grammar both during spontaneous locomotory exploration and during odor-guided behaviors. These findings suggest that the DLS selects which 3D behavior to express at the sub-second timescale, and they set up future work (ongoing in the lab) to ask how corticostriatal circuits implement syllables and grammar and to explore how these circuits are sensitive to sensory cues.

This work has been covered by Harvard Magazine here, by the Simons Foundation here, and by What A Year, a website for high school students interested in science, here.